PipelineDB: Debugging, Auditing, and Replaying Machine Learning and Data Processing Workflows

Building on our work on ModelDB, PipelineDB is a system to help users log, replay, and debug their machine learning and data science pipelines.

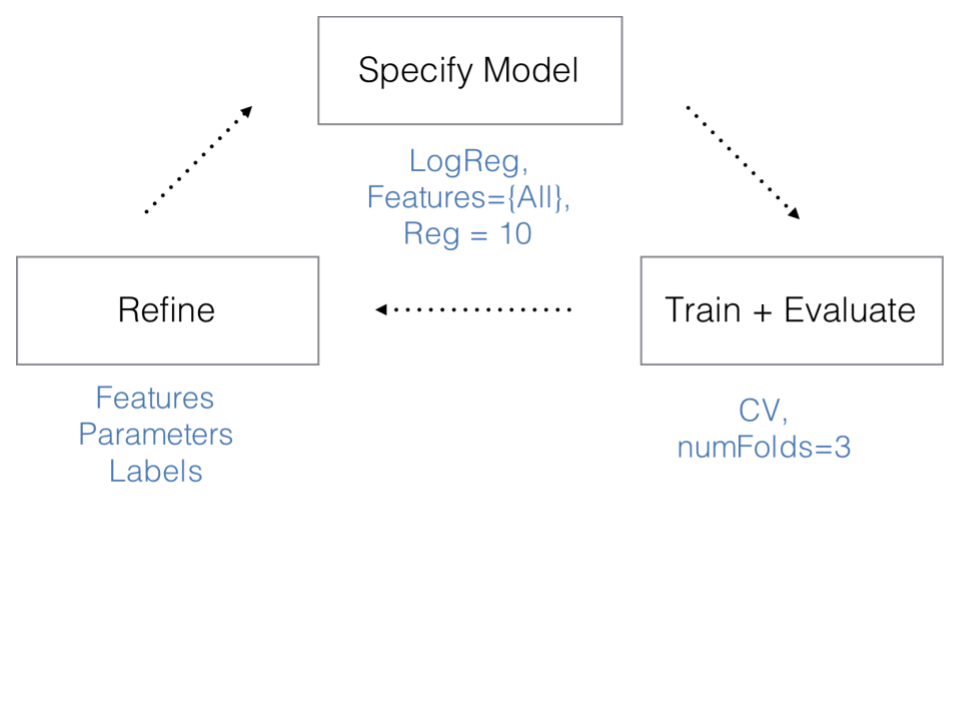

Our key observation is that machine learning is an iterative process that involves specifying a model (i.e., a set of features and parameters) along with an input training data set, evaluating the model on the inputs, and then refining the model to further improve its performance.

This iterative process is illustrated in Figure 1.

Figure 1: Iterative Machine Learning Process

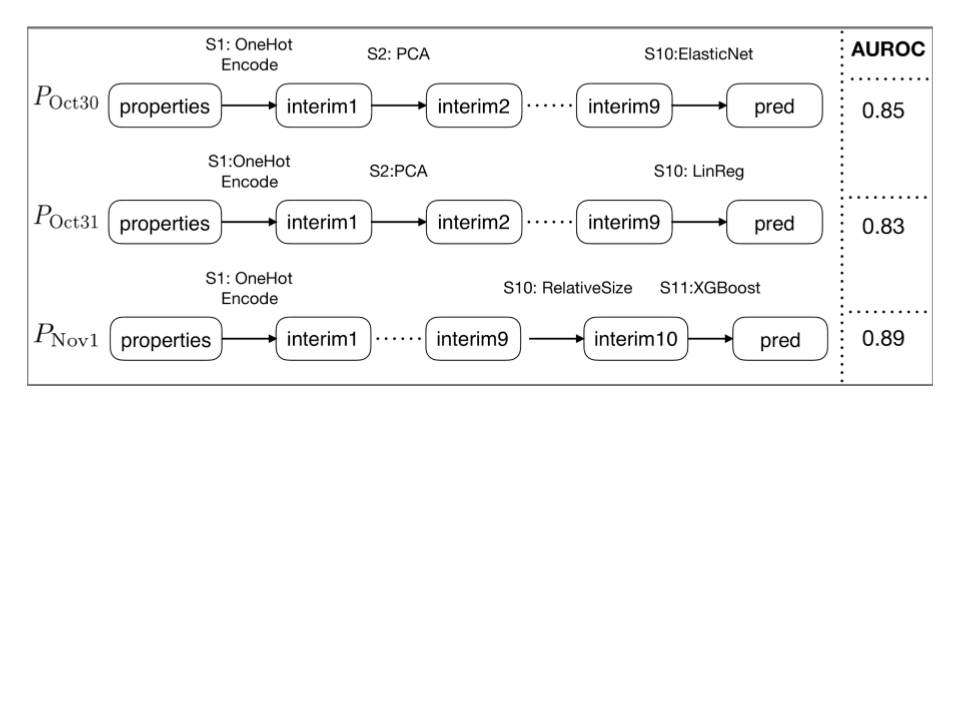

The key challenge we address in PipelineDB is that there is no established way for data scientists to manage this iterative process, which may span thousands of models built over many months for a single modeling task (e.g., a model predicting which movies a user will like or which ads they will click on). Typically these models are expressed as pipelines: sequences of transformations to data, each of which produces one or more intermediate data products. An example is shown in Figure 2.

Figure 2: Typical Machine Learning Pipelines. Here, three pipelines are shown, each with a variety of intermediate states and its overall performance (here, AUROC).
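To make the notion of a pipeline concrete, here is a minimal Python sketch of a sequence of transformations in which every intermediate data product is kept. It is an illustration only, not PipelineDB's implementation: the names `Stage` and `run_pipeline`, the three toy stages, and the input values are all assumptions made for this example.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical type for this sketch: a stage is a (name, function) pair.
Stage = Tuple[str, Callable[[Any], Any]]


def run_pipeline(stages: List[Stage], data: Any) -> Tuple[Any, Dict[str, Any]]:
    """Apply each stage in order and keep every intermediate data product."""
    intermediates: Dict[str, Any] = {}
    for name, fn in stages:
        data = fn(data)
        # Record the output of this stage under the stage's name.
        intermediates[name] = data
    return data, intermediates


# Toy stages and toy input, invented for illustration.
stages: List[Stage] = [
    ("drop_missing", lambda rows: [r for r in rows if r is not None]),
    ("scale", lambda rows: [r / 10 for r in rows]),
    ("threshold", lambda rows: [int(r > 0.5) for r in rows]),
]

result, intermediates = run_pipeline(stages, [2, None, 7, 9])
print(result)               # final output of the last stage
print(list(intermediates))  # stage names, in execution order
```

Keeping the per-stage outputs is what would later allow a run to be inspected or restarted from a chosen stage. A real system would need to store them far more compactly than a dictionary held in memory.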

The lack of good support for managing this process leads to many problems, including:

- There's limited support for relating model performance to various features or hyperparameters, or for tracking model performance over time. Some dashboards do exist, e.g., TensorFlow Playground and NVIDIA DIGITS, but they do not capture all of the intermediate state of the learned models or pipelines, which limits their utility.

- There's no easy way for a data scientist to reproduce a result from a previous run. Especially months after a model is built, reconstructing the exact parameters used for a particular training run may be difficult unless meticulous records are kept.

- There's no way to understand which data was used in training a model, for example, to ask whether a particular feature or a particular user's data was used in a model. This is increasingly important as modern enterprises are required to comply with regulations like the GDPR in Europe, which provides individuals with a right to be forgotten, and to identify cases where private data is accidentally leaked by a model.

- There's no way to visualize what the model is learning. For example, a data scientist may want to understand which parts of the internal hidden state (e.g., hidden layers or internal neurons in a deep net, or feature values in a conventional ML pipeline) are correlated with getting a particular test example right (or wrong).

- There's no way to compare models over time, either in terms of their performance or their internal parameters, and there is no way to transfer knowledge learned from one model or modeling exercise to another model.
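Two of the problems above, reproducing a past run and asking which data went into a model, come down to keeping a queryable record of each training run. The following Python sketch shows one way such a record could look. It is a hedged illustration, not PipelineDB's schema or API: `RunRecord`, `RunLog`, their fields and methods, and the example run and user identifiers are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class RunRecord:
    """Hypothetical record of one training run."""
    run_id: str
    params: Dict[str, object]    # hyperparameters used for the run
    features: List[str]          # feature names fed to the model
    training_user_ids: Set[str]  # whose data was in the training set

    def fingerprint(self) -> str:
        """Stable hash of the configuration, used to spot identical setups."""
        payload = json.dumps(
            {"params": self.params, "features": sorted(self.features)},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class RunLog:
    """Hypothetical in-memory log of runs, keyed by run_id."""

    def __init__(self) -> None:
        self._runs: Dict[str, RunRecord] = {}

    def log(self, record: RunRecord) -> None:
        self._runs[record.run_id] = record

    def params_for(self, run_id: str) -> Dict[str, object]:
        """Return the exact parameters of a past run, for reproduction."""
        return dict(self._runs[run_id].params)

    def runs_using_user(self, user_id: str) -> List[str]:
        """List the runs whose training data included this user."""
        return sorted(
            rid for rid, r in self._runs.items()
            if user_id in r.training_user_ids
        )


# Made-up example runs.
log = RunLog()
log.log(RunRecord("run-1", {"lr": 0.1, "depth": 3}, ["age", "genre"], {"u1", "u2"}))
log.log(RunRecord("run-2", {"lr": 0.01, "depth": 5}, ["genre"], {"u2", "u3"}))

print(log.params_for("run-1"))
print(log.runs_using_user("u2"))
```

Storing raw user identifiers per run is the simplest possible design and is chosen here only for readability. At the scale described above (thousands of models over many months), a real system would more plausibly reference versioned data sets than copy identifiers into every record.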

PipelineDB is designed to alleviate these concerns by providing a number of components that 1) capture models as they are trained, storing their intermediate state and all the predictions they make in a storage-efficient way, and 2) provide visual methods to explore and navigate the recorded data. These methods include examining the internal state of models (including high-dimensional neural nets); comparing trained models and finding patterns in their performance (e.g., understanding which features or neurons are correlated with good performance on a particular input); suggesting additional model transformations that could improve performance (using information from previously trained models); deploying trained models in a model serving framework (e.g., TensorFlow Serving); and replaying or rerunning previous model runs from any point in time.

Key new technical directions include:

(1) An interactive pipeline debugger that supports a zoom-in, zoom-out graphical view of the network, including visualizations of tensors and weights, as well as raw data, and the ability to halt, adjust, and restart the network from a selected point or from predefined save-points.

(2) A visual pipeline explanation and comparison module designed to help non-expert users of ML models understand what the model is doing and compare their model to other models built on the same data sets.

(3) Various outlier detection methods that, for example, detect when models are being applied to test data sets different from the data they were trained on, or identify classes of inputs where a given model or pipeline is underperforming.

(4) A pipeline sharing and training module that uses previously trained models and hyperparameters on similar data to seed/initialize the best parameters for new models that users are building.
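As an illustration of direction (3), the sketch below flags a batch of inputs whose mean for one numeric feature has moved far from the training mean. It uses a simple mean-shift z-score, chosen here for brevity; the source does not say which detection methods PipelineDB uses, and the function names, the threshold of 3.0, and the sample values are all assumptions.

```python
import math
import statistics
from typing import Sequence


def mean_shift_score(train: Sequence[float], new: Sequence[float]) -> float:
    """Z-score of the new batch's mean relative to the training data.

    Assumes the training values are not all identical (non-zero spread).
    """
    mu = statistics.fmean(train)
    sigma = statistics.stdev(train)
    # Standard error of the mean for a batch of this size.
    standard_error = sigma / math.sqrt(len(new))
    return abs(statistics.fmean(new) - mu) / standard_error


def looks_shifted(train: Sequence[float], new: Sequence[float],
                  threshold: float = 3.0) -> bool:
    """Flag the batch when its mean is more than `threshold` standard errors away."""
    return mean_shift_score(train, new) > threshold


# Made-up values for one feature.
train = [0.9, 1.1, 1.0, 1.2, 0.8, 1.0, 0.95, 1.05]
similar_batch = [1.0, 0.9, 1.1, 1.05]
shifted_batch = [2.0, 2.1, 1.9, 2.2]

print(looks_shifted(train, similar_batch))
print(looks_shifted(train, shifted_batch))
```

A check on the mean alone misses changes in spread or shape, so it is only a starting point. Comparing full distributions, or per-class error rates for the "underperforming inputs" case, would be natural extensions.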

Citations

Manasi Vartak, Harihar Subramanyam, Wei-En Lee, Srinidhi Viswanathan, Saadiyah Husnoo, Samuel Madden and Matei Zaharia. 2016. ModelDB: A System for Machine Learning Model Management. HILDA 2016.

Manasi Vartak, Joana M. F. da Trindade, Samuel Madden and Matei Zaharia. 2018. MISTIQUE: A System to Store and Query Model Intermediates for Model Diagnosis. SIGMOD 2018.