Scheduler: An Opportunity for Reinforcement Learning

Today's database systems use simple scheduling policies such as first-come, first-served because they are general and easy to implement. However, a scheduler customized for a specific workload can perform a variety of optimizations: it can prioritize fast, low-cost queries, select query-specific parallelism thresholds, and order operations in query execution to avoid bottlenecks (e.g., by leveraging query structure to run slow stages in parallel with other non-dependent stages). Such workload-specific policies are rarely used in practice because they require expert knowledge and take significant effort to devise, implement, and validate.
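To make the benefit of prioritizing fast queries concrete, the following minimal sketch compares first-come, first-served with a shortest-query-first order on a single executor. The runtimes are hypothetical, and the sketch assumes all queries are already waiting at time zero and that their runtimes are known in advance, which a real scheduler would have to estimate.

```python
from itertools import accumulate


def average_completion_time(durations):
    """Mean completion time when queries run back-to-back on one executor."""
    finish_times = list(accumulate(durations))
    return sum(finish_times) / len(finish_times)


# Hypothetical query runtimes in seconds, listed in arrival order.
arrival_order = [10.0, 1.0, 2.0]

fcfs = average_completion_time(arrival_order)
shortest_first = average_completion_time(sorted(arrival_order))

print(f"FCFS: {fcfs:.2f}s, shortest-first: {shortest_first:.2f}s")
# prints "FCFS: 11.33s, shortest-first: 5.67s"
```

Running the slow query first delays every query behind it, so simply reordering the same work roughly halves the average completion time in this toy case.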

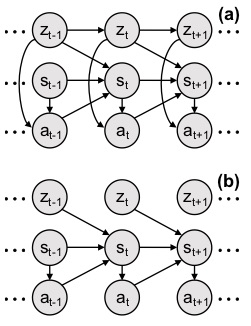

In DAS, we envision a new scheduling system that automatically learns highly efficient scheduling policies tailored to the data and workload. Our system represents a scheduling algorithm as a neural network that takes as input information about the data (e.g., using a CDF model) and the query workload (e.g., using a model trained on previous executions of queries) to make scheduling decisions. We train the scheduling neural network using modern reinforcement learning (RL) techniques to optimize high-level system objectives such as minimal average query completion time.
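As an illustration of the idea only, and not the DAS implementation, the sketch below trains a scheduling policy with REINFORCE, a basic policy-gradient RL method. It makes several simplifying assumptions that are not in the text above: the neural network is replaced by a single learned weight `w`, the only input feature is each query's runtime (assumed known, standing in for the outputs of the data and workload models), there is one executor, and the reward is the negative sum of query completion times. All function names and parameters are hypothetical.

```python
import math
import random


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def run_episode(w, durations, rng, greedy=False):
    """Schedule every query once; return (episode return, d log-prob / dw)."""
    remaining = list(durations)
    clock = 0.0
    total_completion = 0.0
    grad_log_prob = 0.0
    while remaining:
        # Policy: softmax over scores w * duration for the waiting queries.
        probs = softmax([w * d for d in remaining])
        if greedy:
            idx = max(range(len(remaining)), key=lambda i: probs[i])
        else:
            idx = rng.choices(range(len(remaining)), weights=probs)[0]
        # Gradient of log pi(idx) with respect to w for a linear softmax policy.
        expected_d = sum(p * d for p, d in zip(probs, remaining))
        grad_log_prob += remaining[idx] - expected_d
        clock += remaining.pop(idx)
        total_completion += clock
    return -total_completion, grad_log_prob


def train(episodes=2000, n_queries=5, lr=0.05, seed=0):
    rng = random.Random(seed)
    w = 0.0  # w = 0 corresponds to a uniformly random order
    for _ in range(episodes):
        durations = [rng.uniform(0.1, 1.0) for _ in range(n_queries)]
        ret, grad = run_episode(w, durations, rng)
        # Baseline: expected return of a uniformly random order on this workload.
        baseline = -(n_queries + 1) / 2 * sum(durations)
        w += lr * (ret - baseline) * grad  # REINFORCE update
    return w


w = train()
workload = [0.9, 0.2, 0.5, 0.1, 0.7]  # hypothetical runtimes in arrival order
learned_return, _ = run_episode(w, workload, random.Random(1), greedy=True)
fcfs_return = -sum(sum(workload[: i + 1]) for i in range(len(workload)))
print(f"learned weight: {w:.2f}")
print(f"total completion time, learned: {-learned_return:.2f}, FCFS: {-fcfs_return:.2f}")
```

In this toy setting the weight is driven negative, so the policy learns to favor short queries without that rule being programmed in. A realistic system would face estimated rather than known runtimes, multiple executors, and dependencies between query stages, which is where a richer learned model would be needed.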
